Beyond Algorithms: Human-AI Partnership in Border Security

Why Human-AI Partnership Matters in Border Security

European research indicates that even the most advanced AI border security systems fall short when human factors are not properly considered. The future of border protection lies not in replacing humans but in building effective partnerships between artificial intelligence and human expertise.

The AI Performance Paradox

For years, developers assumed that more accurate AI algorithms would automatically lead to better border security. That assumption has not held up. Recent studies spanning 40 research projects point to a paradox: even technically excellent AI systems can reduce overall security effectiveness when human factors are ignored.

Critical Finding: AI system accuracy metrics like precision and recall don’t predict operational success in real-world border security scenarios.
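One way to see why these metrics can fall short is a small worked example. The sketch below uses invented counts for two hypothetical systems (the names `system_a` and `system_b` and all numbers are assumptions for illustration, not figures from the research). Both score identically on precision and recall, yet they place very different alert volumes in front of an operator.

```python
def precision_recall(true_pos: int, false_pos: int, false_neg: int) -> tuple[float, float]:
    """Return (precision, recall) computed from confusion-matrix counts."""
    flagged = true_pos + false_pos   # everything the system raised an alert for
    actual = true_pos + false_neg    # everything that really was an incident
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall


# Hypothetical counts per shift (illustrative only)
system_a = {"true_pos": 9, "false_pos": 1, "false_neg": 1}
system_b = {"true_pos": 900, "false_pos": 100, "false_neg": 100}

for name, counts in (("A", system_a), ("B", system_b)):
    precision, recall = precision_recall(**counts)
    alerts = counts["true_pos"] + counts["false_pos"]
    print(f"System {name}: precision={precision:.2f} recall={recall:.2f} alerts={alerts}")
```

Both systems report a precision and recall of 0.90, but the operator of system B must work through 1000 alerts per shift instead of 10. The metrics alone say nothing about that workload.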

The research demonstrates that border guards operating AI-enhanced systems face unique challenges. They must make life-critical decisions based on AI recommendations while maintaining situational awareness in complex, rapidly evolving environments. Systems designed without considering this human element create cognitive overload rather than enhanced capability.

The Three Pillars of Situational Awareness

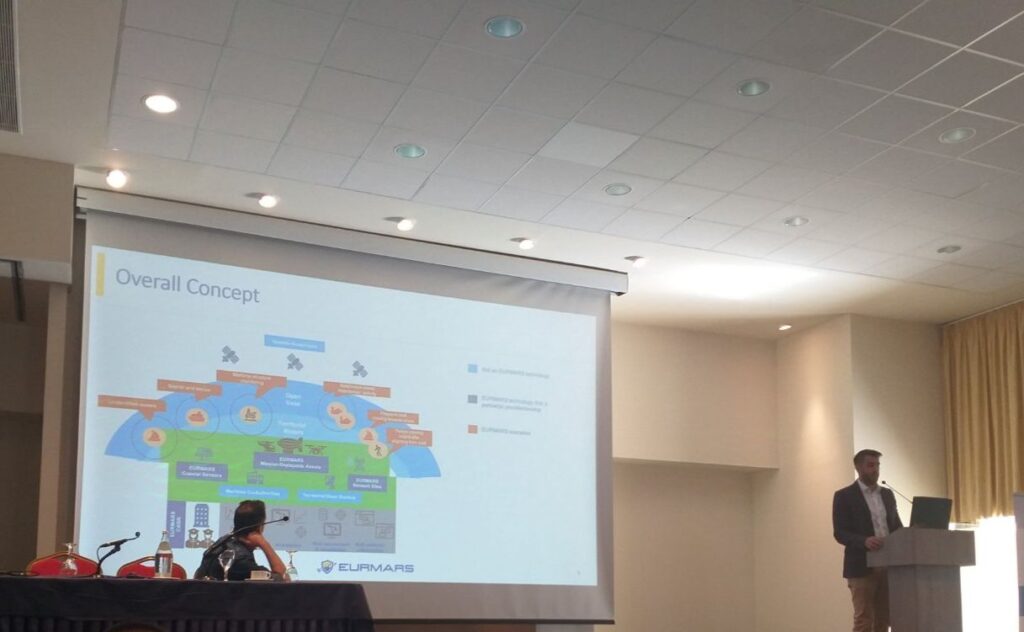

European researchers have developed a framework for understanding how humans and AI can work together effectively. Grounded in established psychological principles, its three-level approach to situational awareness changes how we think about AI integration in security systems.

The OODA Loop Revolution

The research adapts a proven military decision-making model, the OODA loop (Observe, Orient, Decide, Act), to border security contexts. This framework provides a structured approach to human-AI collaboration that enhances rather than replaces human decision-making.
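A single OODA cycle with a human at the Decide step might be sketched as follows. This is a minimal illustration, not the design used in the research: the `Assessment` fields, the function names, and the sample sensor data are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Assessment:
    """AI output for the Orient step (all fields are illustrative)."""
    summary: str
    recommendation: str
    rationale: str  # shown to the operator so the AI's reasoning is visible


def ooda_cycle(
    observe: Callable[[], dict],
    orient: Callable[[dict], Assessment],
    decide: Callable[[Assessment], str],
    act: Callable[[str], None],
) -> str:
    """Run one Observe-Orient-Decide-Act cycle with a human at Decide."""
    observation = observe()           # Observe: gather sensor data
    assessment = orient(observation)  # Orient: AI interprets the data
    decision = decide(assessment)     # Decide: the human operator chooses
    act(decision)                     # Act: carry out the human's decision
    return decision


# Stand-in components (hypothetical)
def read_sensors() -> dict:
    return {"sensor": "camera-7", "motion": True}


def ai_orient(obs: dict) -> Assessment:
    return Assessment(
        summary=f"Motion detected by {obs['sensor']}",
        recommendation="dispatch patrol",
        rationale="movement pattern resembles earlier crossing attempts",
    )


def operator_decide(assessment: Assessment) -> str:
    # A real system would show the assessment in a user interface.
    # Here the operator overrides the AI recommendation.
    return "verify with second camera"


actions: list[str] = []
final = ooda_cycle(read_sensors, ai_orient, operator_decide, actions.append)
print(final)
```

The AI only produces an assessment. The value returned by `decide` is the only thing passed to `act`, so the recommendation cannot take effect without the operator.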

Traditional AI Approach

• AI makes autonomous decisions

• Humans serve as backup systems

• Focus on technical accuracy

• Limited human understanding of AI reasoning

Human-AI Partnership

• AI enhances human decision-making

• Humans maintain control and oversight

• Focus on operational effectiveness

• Transparent AI reasoning processes

This approach recognizes that in security-critical domains, human oversight is not just desirable; it is essential. The responsibility for decisions that affect human lives cannot be delegated to algorithms alone.

Scientific Breakthroughs in Human-AI Systems

The European research reveals several key insights that are transforming how we approach AI in border security:

Transformative Research Findings

AI systems with multiple algorithms can create architectures so complex that even expert users struggle to understand them. This complexity undermines trust and effective utilization.

In security domains, human operation is required by law and ethics. “Man-out-of-the-loop” designs are not viable options, making human-AI collaboration essential rather than optional.

Border security operations are characterized by time-critical decision-making under pressure. AI systems must enhance rapid decision-making rather than introduce delays or confusion.

While AI can provide recommendations and analysis, human authorities bear legal and moral responsibility for decisions. Systems must support this accountability structure.
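One way a system could support this accountability structure is to record each AI recommendation alongside the human decision that followed it. The sketch below is an assumption about what such a record might contain. The `DecisionRecord` class, its fields, and the sample entries are hypothetical and not taken from the research.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)  # frozen: a record cannot be altered after creation
class DecisionRecord:
    operator_id: str
    ai_recommendation: str
    human_decision: str
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    @property
    def overridden(self) -> bool:
        """True when the operator chose something other than the AI's suggestion."""
        return self.human_decision != self.ai_recommendation


# Hypothetical log entries
audit_log: list[DecisionRecord] = [
    DecisionRecord("op-014", "dispatch patrol", "verify with second camera"),
    DecisionRecord("op-014", "no action", "no action"),
]

override_count = sum(record.overridden for record in audit_log)
print(f"{override_count} of {len(audit_log)} recommendations were overridden")
```

Keeping the recommendation and the decision in the same record makes it clear who decided what, and the override count gives a rough signal of how often operators disagree with the system.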

Practical Applications and Results

The research demonstrates concrete benefits of human-AI partnership approaches in real border security scenarios:

Enhanced Situational Awareness: AI systems that work with human cognitive processes rather than against them significantly improve operational awareness and threat detection.

Reduced False Alarms: Human oversight helps filter AI-generated alerts, reducing false positives that can overwhelm operators and lead to alert fatigue.

Improved Decision Quality: The combination of AI data processing and human judgment produces better decisions than either could achieve alone.

Increased Operator Confidence: When operators understand and trust AI systems, they’re more likely to use them effectively and appropriately.
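The false-alarm point above can be illustrated with a small triage sketch. It assumes each AI alert carries a confidence score and that an operator confirms or dismisses every alert before it is escalated. The `Alert` class, the scores, and the `operator_confirms` stand-in are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Alert:
    alert_id: str
    description: str
    score: float  # hypothetical AI confidence in [0, 1]


def triage(alerts: Iterable[Alert], operator_confirms: Callable[[Alert], bool]) -> list[Alert]:
    """Present alerts highest score first; keep only those the operator confirms."""
    ranked = sorted(alerts, key=lambda a: a.score, reverse=True)
    return [alert for alert in ranked if operator_confirms(alert)]


alerts = [
    Alert("a1", "animal near fence", 0.35),
    Alert("a2", "vehicle stopped at unmarked crossing", 0.92),
    Alert("a3", "wind-blown debris", 0.20),
]


def operator_confirms(alert: Alert) -> bool:
    # Stand-in for the operator's judgment
    return "vehicle" in alert.description


escalated = triage(alerts, operator_confirms)
print([alert.alert_id for alert in escalated])  # ['a2']
```

Ranking by score lets the operator see the most likely threats first, while the confirmation step keeps the final filtering decision with the human.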

The Future of Border Security AI

This research points toward a future where AI and human intelligence work in seamless partnership. The goal isn’t to create autonomous border security systems, but to develop AI that makes human operators more effective, more accurate, and more capable.

The implications extend beyond border security to any domain where critical decisions must be made under pressure with incomplete information. The principles of human-AI partnership developed in this research provide a roadmap for responsible AI deployment in high-stakes environments.

Looking Ahead: The most successful border security systems of the future will be those that best combine artificial intelligence with human wisdom, creating partnerships that are more powerful than either could be alone.

A New Paradigm for Security Technology

The European research on human-AI partnership in border security represents a fundamental shift in how we approach technology development for critical applications. Rather than pursuing autonomous systems that replace human judgment, we should focus on creating partnerships that enhance human capabilities.

This human-centered approach to AI development offers the best path forward for creating border security technology that is not only technologically advanced but also operationally effective, ethically sound, and genuinely useful to the people who depend on it every day.